
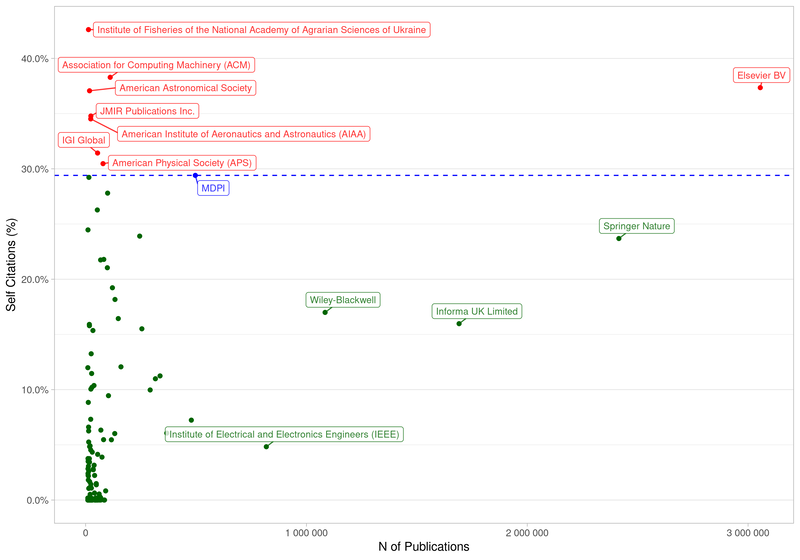

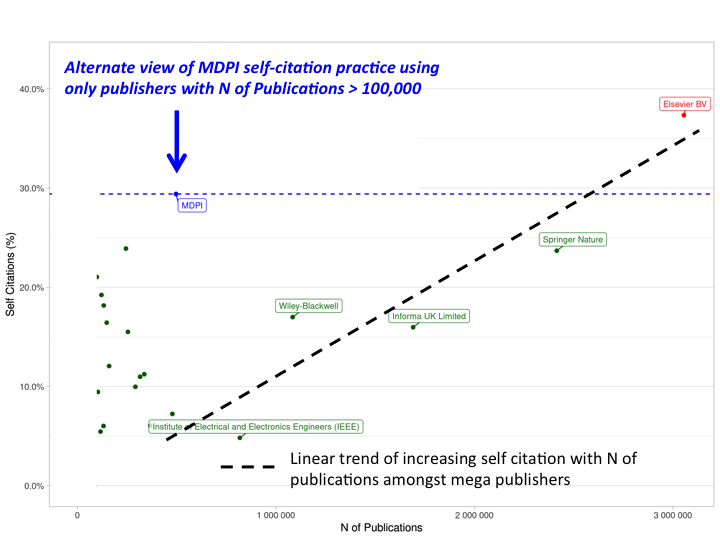

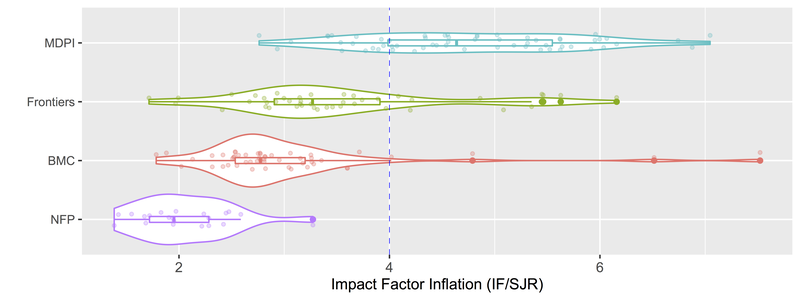

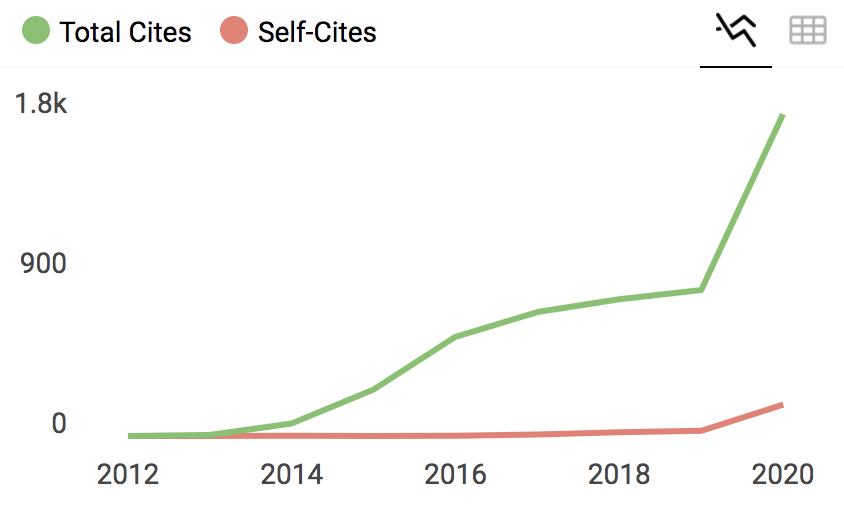

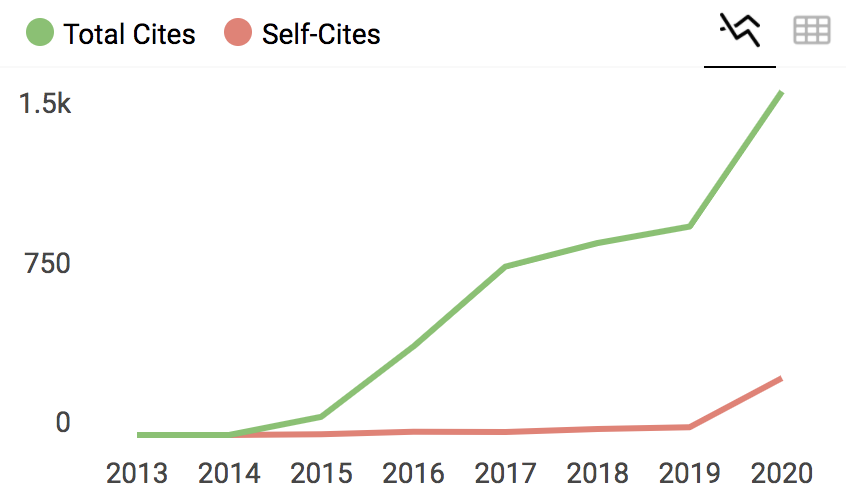

Reading time: 10 min

TL;DR: I re-ran the IF/SJR analysis, this time focused on the publishers that MDPI highlighted as contemporaries with very high self-citation rates. Many of those publishing groups are non-profit professional societies. Like other non-profits, their IF/SJR ratios were very low and drastically different from MDPI's. The list also brought IGI Global into the mix, which has been referred to as a "vanity press" publisher that contributes little beyond pumping up people's CVs with obscure academic book chapters. IGI Global and my control predatory publisher (Bentham) were the only groups with an IF/SJR comparable to MDPI.

I recently shared some thoughts on what defines a modern predatory publisher. In that post (see here [1]), I defined five red flags that act as markers of predatory behaviour. These were: #1) a history of controversy over rigour of peer review, #2) rapid and homogeneous article processing times, #3) frequent email spam to submit papers to special editions or invitations to be an editor, #4) low diversity of journals citing works by a publisher, and #5) high rates of self-citation within a publisher's journal network. Overall, the post seems to have been well-received (I like to think!).

One tool I proposed to help spot predatory publishing behaviour was to use the difference in how the Impact Factor (IF) and Scimago Journal Rank (SJR) metrics are calculated to expose publishers with either #4) low diversity of journals citing works by a publisher and/or #5) high rates of self-citation within a publisher's journal network. I'll reiterate that one should really judge all five red flags when drawing conclusions about a publisher, but today I'll focus on the IF/SJR metric.

My motivation in using the IF/SJR ratio was to try to overcome my own biases and see if there really was a quantifiable difference in the citation behaviours of reputable vs. controversial publishers.
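
For anyone who wants to compute the ratio themselves, here is a minimal sketch in Python. The function name `if_sjr_ratio` is my own, not anything official from Clarivate or Scimago. Returning `None` for a missing SJR mirrors the caveat further down that journals without a 2020 SJR entry were excluded. The example uses the MDPI-wide averages quoted later in this post (IF 3.98, SJR 0.88). Note that a ratio of averages is not the same thing as the average of per-journal ratios, which is what the analysis is built on.

```python
from typing import Optional


def if_sjr_ratio(impact_factor: float, sjr: Optional[float]) -> Optional[float]:
    """Return IF/SJR, or None when there is no usable SJR entry."""
    # Journals without an SJR entry cannot be scored, so they are skipped.
    if sjr is None or sjr <= 0:
        return None
    return impact_factor / sjr


# MDPI-wide averages quoted later in this post: IF 3.98, SJR 0.88.
# This is a ratio of averages, shown only to illustrate the arithmetic.
mdpi_ratio_of_averages = if_sjr_ratio(3.98, 0.88)
print(round(mdpi_ratio_of_averages, 2))  # 4.52
```
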
Ultimately, there was a significant difference in IF/SJR between non-profit publishing groups and open access publishers like BMC, Frontiers, and MDPI. A striking finding was just how much of an outlier MDPI was, with an IF/SJR similar to Bentham Open - a known predatory publisher (MDPI:Bentham p-adj = 0.12).

"Why do you keep on picking on MDPI?"

It seems no matter how one looks at predatory publisher behaviours, the conversation eventually turns to MDPI these days. There's just a lot of really good information out there analysing their publishing practice (e.g. see here [2] or here [3]). Even proper peer-reviewed research has used MDPI as a case study for predatory behaviours, including analysis of the MDPI journal "Sustainability" (Copiello, 2019) [4], and recently Oviedo-Garcia (2021) [5] showed excessive rates of self-citation across MDPI journals; in fairness, Oviedo-Garcia (2021) currently has an expression of concern attached, with an investigation ongoing. Excessive self-citation can reflect an abuse of publication metrics to artificially increase a publisher's Impact Factor.

MDPI recognized the serious nature of these self-citation accusations. In an official response to Oviedo-Garcia (2021) [6], MDPI defended themselves with the following chart, saying:

MDPI: "It can be seen that MDPI is in-line with other publishers, and that its self-citation index is lower than that of many others; on the other hand, its self-citation index is higher than some others."

Is MDPI really "in-line" with other publishers though? I have attached a lightly annotated modification to their figure, censoring any publishers with fewer than 100k publications. This really exposes just how much of an outlier MDPI is compared not only to smaller publishers, but also to larger mega publishers, which follow a linear trend in self-citation rate vs. number of publications.
I also included the IF/SJR analysis I ran back in August (details here) to emphasize just how much of an outlier MDPI was, even from Frontiers (MDPI:Frontiers p-adj < 1e-7).

Importantly, I will take a moment to emphasize that individual journals within a publishing group can be judged on their own merits. There is a lot of heterogeneity within publishing groups for academic practice, and this is also seen in the IF/SJR metric. But I will make explicit here that this is a conversation about publisher groups as a whole. A few good apples do not mean the batch isn't spoiled. But the brilliant part of having IF/SJR is that it can act as an objective litmus test for a specific journal if you are approached to review for them.

"Perhaps _______ explains MDPI's high self-citation rate?"

In response to my last post, a few folks commented that MDPI's large number of Special Issues might underlie its high self-citation rate. Special Issues contain editorial pieces and articles that cite within the issue, providing a mechanism to explain rates of self-citation. That said, that's an entirely neutral observation with respect to justifying those self-citation rates. After all, MDPI really went off the rails with Special Issues, publishing over 30 times more Special Issues in 2021 than in 2016 (with many articles per Special Issue) [2]. It's debatable how useful such explosive Special Issue growth actually is for scientific progress [2,3]. Either way, the impact of Special Issues on citation counts is also undoubtedly inflating MDPI journals' Impact Factors:

Figure: proportion of self-citations to total citations for Vaccines (left) and Pathogens (right). There was a spike in self-citations in 2020, which may be coming from the explosion in MDPI Special Issues between just 2020 and 2021 [2]. Note this is strictly citations to the exact same journal, and not citations within the overall publishing group.
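
The same-journal self-citation rate in these charts is just self-citations divided by total citations. As a quick worked check, the sketch below recomputes the percentages from the raw counts quoted in the figure captions near the end of this post. The helper name `self_citation_pct` and the dictionary layout are mine, purely for illustration.

```python
# (self-citations, total citations) as quoted in this post's figure captions.
counts = {
    "Communications of the ACM": (23, 4350),
    "Sustainability": (12367, 52097),
    "Computing Surveys": (71, 6978),
    "Vaccines (2020)": (246, 1485),
    "Pathogens (2020)": (162, 1664),
}


def self_citation_pct(self_cites: int, total_cites: int) -> float:
    """Same-journal self-citations as a percentage of all citations."""
    return round(100 * self_cites / total_cites, 1)


rates = {name: self_citation_pct(*pair) for name, pair in counts.items()}
for name, pct in rates.items():
    print(f"{name}: {pct}%")
```

These counts only cover a journal citing itself, so a Vaccines article citing Pathogens would not show up in any of these percentages.
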
As of 2020, Vaccines and Pathogens were two of MDPI's 'better' outliers in terms of IF/SJR, at 3.41 and 3.55 respectively.

A second comment I got was that MDPI, thanks to its large umbrella of journals, likely had an exaggerated IF/SJR due to publishing on niche topics. On the surface this argument might seem intuitive, but to me it doesn't explain why BMC or Frontiers have such drastically different IF/SJR ratios; both are similar open access mega publishing groups with diverse journals. Special Issues could obviously play a role (but see above). I decided to test this idea a bit using the publishers MDPI themselves highlighted as contemporaries with high rates of self-citation. Surely if MDPI is comparable to other publishers, then other publishers with niche interests and high self-citation rates should also have disproportionately high IF/SJR ratios, right?

Analysis of IF/SJR for highly self-citing publishers

To test how a high self-citation rate impacts IF/SJR, and whether niche topics might unfairly bias IF/SJR, I collected the IF/SJR ratios of the publishing groups highlighted by MDPI for their high self-citation rates (see earlier figure). Here's the list of publishers I looked at:

Publishers highlighted by MDPI for having higher self-citation rates

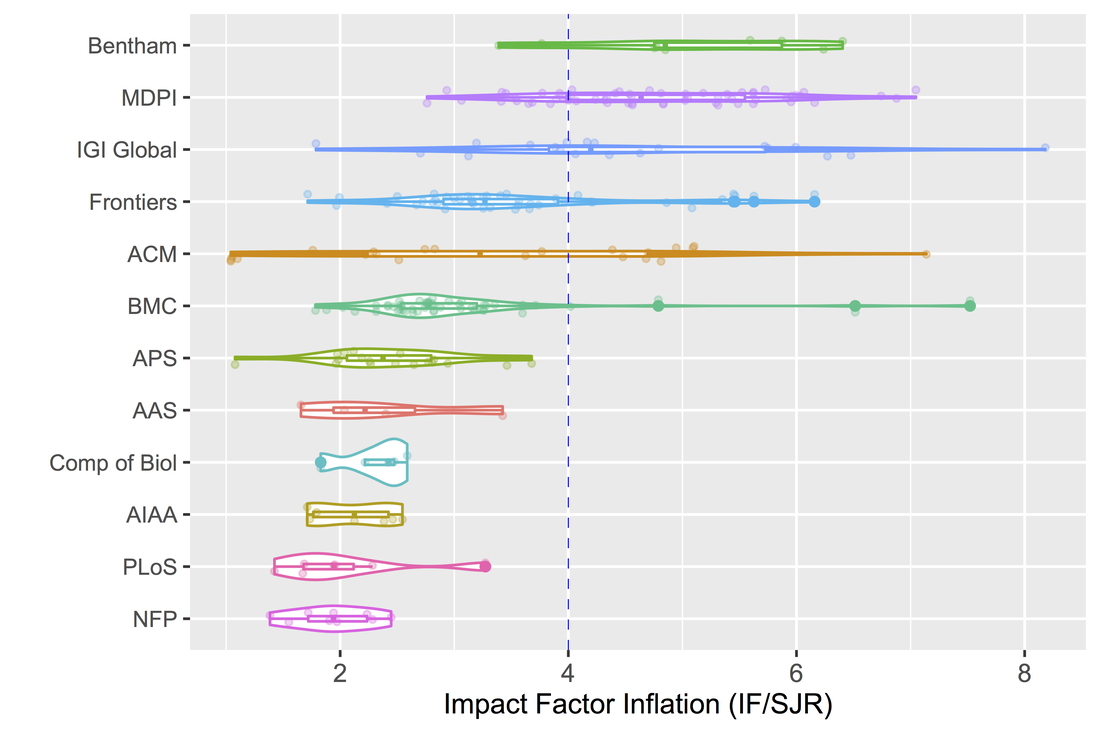

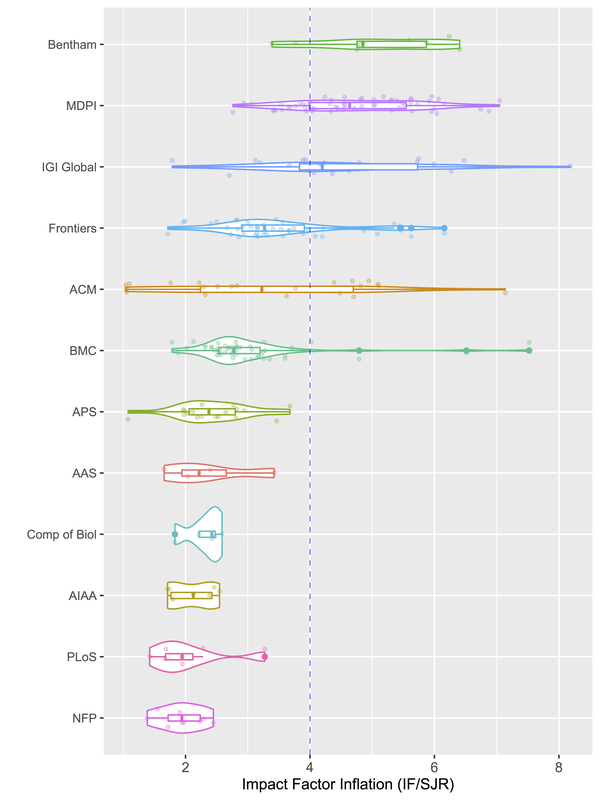

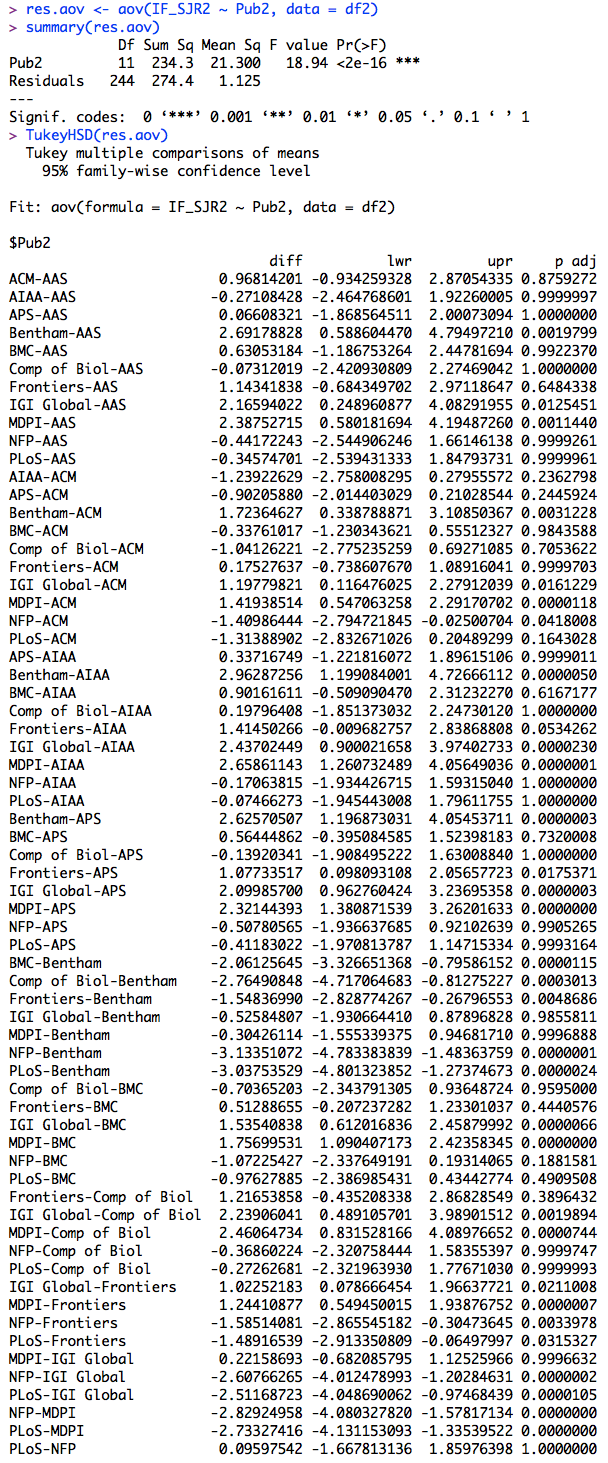

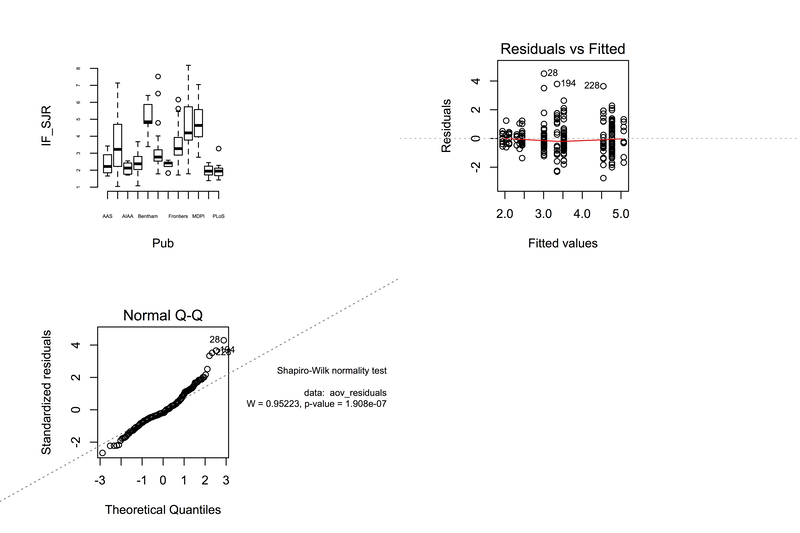

Caveats to note: i) not all journals had SJR entries for 2020; these journals were excluded from the analysis. ii) Some publisher groups were excluded because they weren't indexed by Scimago or Clarivate. iii) I only collected SJR data from a selection of ACM and AIAA journals. Both publishers had many, many indexed publications from conference proceedings (not peer-reviewed), which I excluded for hopefully obvious reasons. Full details at the end of this post.

Without further ado...

"Highly self-citing" non-profit journals nevertheless avoid inflating their IF/SJR ratios

As you can see, almost every other publisher has a low IF/SJR more in line with not-for-profit publishers (NFPs). That actually makes sense, given that many of those publishers ARE not-for-profit organizations (AIAA, ACM, APS, AAS). This is true across disciplines: from biology to space exploration, from computation to chemistry to physics. This is really crucial to demonstrating the value of the IF/SJR comparison. This is also intuitive, as Scimago Journal Rank was specifically designed as a metric that helps normalize a journal's impact score within its discipline [7].

This really emphasizes how SJR is also driven by the diversity of other journals citing works by that publisher! Thus even if a publisher is highly self-citing, if they are publishing useful information, others will cite their work. That will increase their SJR, keeping their IF/SJR in check. Likewise, high rates of self-citation drastically inflate a journal's IF only if that journal is publishing enough self-citing articles to actually sway its IF meaningfully relative to the total number of citations it receives.

I swear I am just trying to be objective here

The only publisher amongst those highlighted by MDPI that was really comparable in terms of IF/SJR was IGI Global.
You may have heard of IGI Global before, probably via your email inbox inviting you to publish a book, or contribute an article to "the International Journal of..." Yes... IGI Global is ALSO a controversial publishing group. They are specifically listed as a "Vanity Press" outlet by beallslist.net, and are described as a "Vampire Press", or as "Rogue Book Publishers" in Eriksson and Helgesson (2017) for being a "write-only" press that is basically used solely to pad a CV with a book chapter [8,9]. Incredibly, IGI Global doesn't even have an English Wikipedia page: it was deleted in 2017 after community consensus that the publisher was so minor and disreputable as to not merit an article at all (see here).

Indeed, only MDPI and IGI Global were comparable to the control predatory publisher Bentham Open (IGI:Bentham p-adj > 0.10). Details from this analysis are provided below:

Figure: output from IF/SJR analysis. Left) violin plots, expanded a bit to better see data points. Middle) One-way ANOVA + Tukey's HSD multiple comparisons. Right) Residuals and distribution normality (which... it's not normal. But one-way ANOVA is fairly robust to violations of normality [10], and the raw data are visible in the left plot and can be downloaded at the end of this article).

Conclusion: not changed

This dive into additional publisher IF/SJRs really only reinforced how much of an outlier MDPI is in its citation behaviour. It also newly confirms that another publisher famous for predatory behaviour (IGI Global) has an IF/SJR comparable to MDPI and Bentham Open. Despite other publishers having a diversity of disciplines, niches, and self-citation rates, aggressive rent extractors like MDPI and IGI Global continue to have significantly different citation behaviours.

Once again, IF/SJR is a tool. I am proposing here that IF/SJR can help illuminate journals with egregious self-aggrandizing citation behaviour, and even publishers if they host many self-aggrandizing journals.
IF/SJR relies on self-citation rate (increasing IF), but just as important is the ability of publishers to put out good work that attracts citations from other groups (increasing SJR). The totality of a publisher's behaviour: its history of controversy (red flag #1), the conditions it sets for peer review (red flag #2), its reputation for spam (red flag #3), and egregious citation behaviour (red flags #4, #5)... all of these are important in determining reputation. But what we call disreputable publishers is really just semantics: "aggressive rent extractors", "vanity publishers", "predators" - these are just names. What's important is how they help advance scientific progress... if they help at all.

References

Additional analysis details

First off, here's the raw data:
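
If you want to poke at the raw data yourself, the one-way ANOVA step from the figure above boils down to comparing between-group and within-group variance. Below is a minimal sketch of that F statistic using only the Python standard library. The three "publishers" and their values are made-up toy numbers, NOT data from this post, and the function name is mine. Getting a p-value or running Tukey's HSD needs a proper statistics package, which is not shown here.

```python
from statistics import mean


def one_way_anova_f(groups):
    """F statistic of a one-way ANOVA across lists of observations."""
    k = len(groups)                      # number of groups
    n = sum(len(g) for g in groups)      # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Toy IF/SJR-like values, invented purely for illustration.
toy = {
    "Publisher A": [1.0, 2.0, 3.0],
    "Publisher B": [2.0, 3.0, 4.0],
    "Publisher C": [5.0, 6.0, 7.0],
}
f_stat = one_way_anova_f(list(toy.values()))
print(round(f_stat, 2))  # 13.0
```

A large F only says that at least one group differs; the pairwise p-adj values quoted in the post come from the follow-up multiple comparisons.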

Now then: I didn't want to go off on too big a tangent mid-article, but there are some important details to the IF/SJR analysis above that I wanted to note for posterity. Going through journals from these publishers, I found some data types that were... difficult to include. I'll try to explain these issues below.

First: the Ukrainian Academy Fisheries publisher... didn't strictly publish in English. Not only could I not find IF/SJR data for this publisher, but I also have to imagine that non-English niche publishing groups are a bit excused in their rates of self-citation. This isn't really a comparable publishing group to the other publishers in the analysis.

Second: the Association for Computing Machinery (ACM) and American Institute of Aeronautics and Astronautics (AIAA) host a number of conferences, and their SJR entries were overwhelmed by annual conference proceedings mixed in with peer-reviewed journals (for instance, see search term: here). Conference proceedings do not undergo the same peer review process as journal articles, do not typically receive impact factors, and were also only sporadically indexed by Scimago from year to year. Conference proceedings also typically cite far, far fewer external sources, and editorial pieces will cite their conference proceedings directly (inflating self-citation rate). For all these reasons, I removed all the conference proceeding publications from the analysis, and tried to keep only ACM and AIAA publications run by actual peer-reviewed journals.

This required manual curation strategies. For ACM, I only looked through the first 50 entries on Scimago and then cross-referenced these for whether they had an Impact Factor in 2020. This may have been imperfect as a methodology. In the end, I still came out with 22 ACM journals, so I feel like this should at least be a decent sampling. There were 424 entries for AIAA, with the vast, vast majority seeming to be conference proceedings and "collections of technical articles".
Rather than manually curate all of them, I searched "AIAA journal", which yielded 7 entries (see search term: here).

Third: ACM also seemed to host a massive diversity of major or niche journals. This was readily reflected in their very diverse range of:

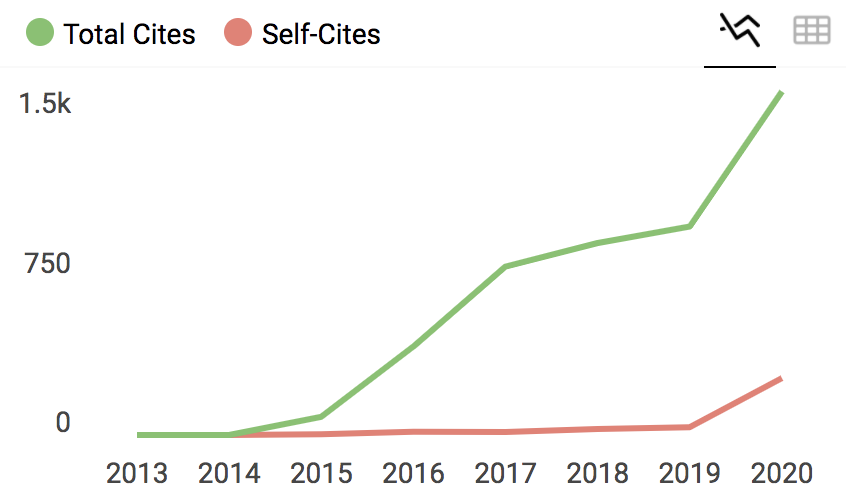

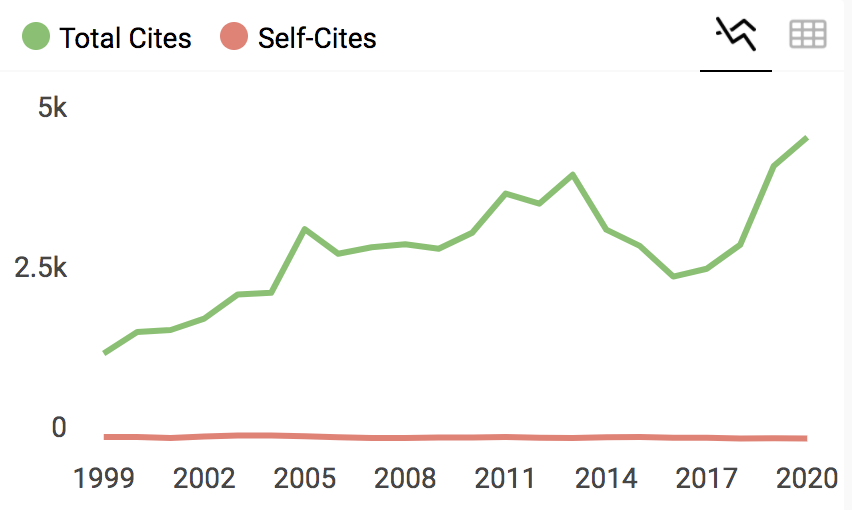

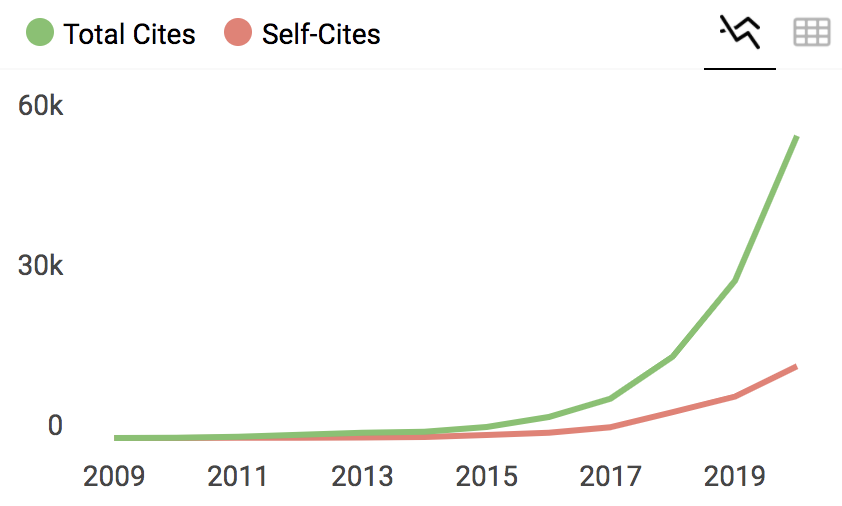

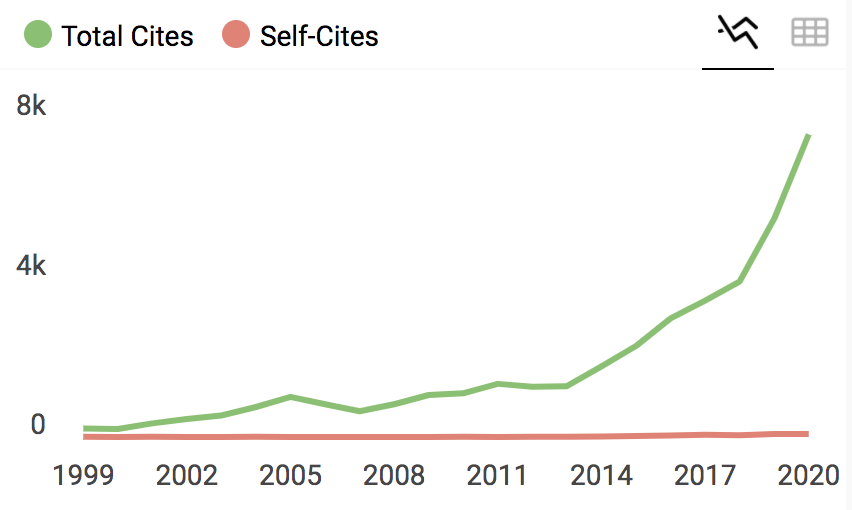

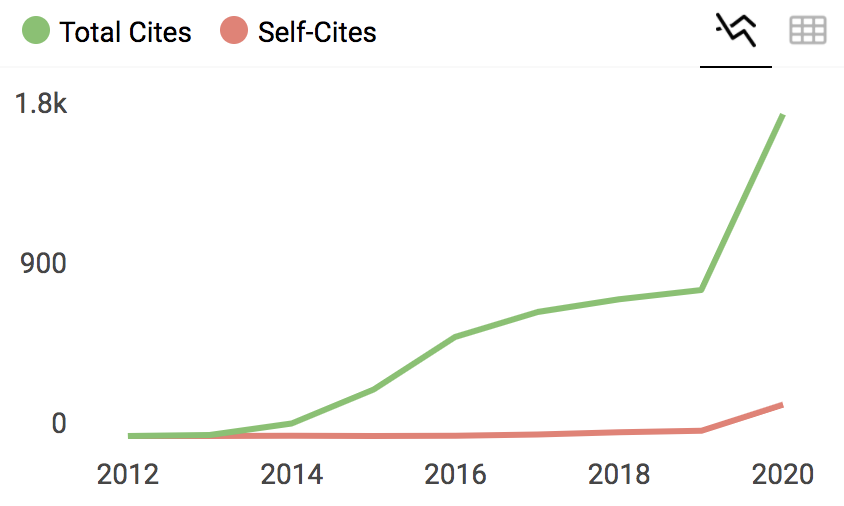

As you can see, the standard deviation of the overall IF/SJR is high, but also the variation in both the underlying IF and SJR data in the ACM pool was almost as large as the averages themselves! By comparison, MDPI IFs had an average of 3.98±1.18, and SJRs 0.88±0.37. So not only do ACM journals have high IF/SJR, but the independent spreads of IF and SJR across journals also varied drastically. I didn't censor any ACM journals because of this, but felt it was worth noting. Perhaps this reflects a signal expected of publishing niches?

I also had a cursory glance through ACM self-citation statistics (there are year-by-year charts available on Scimago), which suggested they do not, in fact, have a very high self-citation rate when you don't count conference proceedings - at least not when citing their same exact journal.

Figure: self-citations over time. Left) self-citations of the ACM journal "Communications of the ACM" (IF/SJR = 4.81). Self-citations/Total citations = 23/4350 = 0.5%. Right) self-citations by MDPI journal "Sustainability" (IF/SJR = 5.31). Self-citations/Total citations = 12367/52097 = 23.7%. The trend of Sustainability citing itself began around 2018, one year prior to Copiello (2019) [4] calling Sustainability out for questionable practice. Note that Sustainability citing another MDPI journal here is not counted towards self-citation rate (same goes for Communications of the ACM).

Figure: more self-citations over time. Left) self-citations of the ACM journal "Computing Surveys" (IF/SJR = 4.95). Self-citations/Total citations = 71/6978 = 1.0%. This may be an example of a niche effect inflating the IF/SJR. Middle) self-citations by MDPI journal "Vaccines" (IF/SJR = 3.41). Vaccines had a self-citation rate of 246/1485 = 16.6% in 2020, which you can see was a striking departure from their previous years. And again, that's just Vaccines articles that cite Vaccines, not MDPI as a whole. Right) self-citations by MDPI journal "Pathogens" (IF/SJR = 3.55).
Again, a spike in self-citations occurs in 2020, though to a lesser extent (162/1664 = 9.7%). 9.7% is not yet problematic in my book, but again, that's just Pathogens articles citing other Pathogens articles, not MDPI as a whole. So a Vaccines article citing a Pathogens article would not register here as a self-citation.

I suspect MDPI included conference proceeding collections in their original analysis, giving the false impression that these groups had extremely high self-citation rates. Thus... even MDPI's examples of high self-citers likely aren't genuinely higher self-citers; MDPI really is in a league of its own.

Edit Dec 12th: I figured I should put the formulae for IF and SJR here so folks with algebraic minds can judge for themselves how the comparison holds up. Basically, IF is a simple metric of total citations received over total publications put out. SJR is a much more sophisticated process that evaluates not only a journal's total citations, but also the diversity of where those citations are coming from. It iterates through citation networks until it converges on a specific prestige value. Then it takes that prestige and divides it by the total number of articles put out by a journal. So the big difference is that SJR doesn't just look at total citations, but normalizes the citation count by the diversity of who is doing the citing.
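
For reference, here is that description written out in symbols. The first line is the standard two-year Impact Factor. The second and third lines are only a schematic of the SJR idea as described above, not Scimago's actual formula, which has additional terms I'm not reproducing here. The symbols $C$, $N$, $P$, $c_{ij}$ and the update function $f$ are my own notation.

```latex
% Two-year Impact Factor of a journal in year y:
% citations received in year y to items from the two previous years,
% divided by the citable items published in those two years.
\mathrm{IF}_{y} = \frac{C_{y}(y-1) + C_{y}(y-2)}{N_{y-1} + N_{y-2}}

% Schematic SJR: the prestige P_j of journal j is updated from the prestige
% of the journals i that cite it (c_{ij} = citations from i to j),
% repeated until the values stop changing ...
P_{j}^{(t+1)} = f\left(\{P_{i}^{(t)}\}, \{c_{ij}\}\right),
\qquad P_{j} = \lim_{t \to \infty} P_{j}^{(t)}

% ... and the converged prestige is then divided by the journal's article count.
\mathrm{SJR}_{j} \propto \frac{P_{j}}{N_{j}}
```

The ratio IF/SJR therefore grows when a journal's raw citation count outpaces the prestige-weighted citations it receives from the wider network.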


Author: Mark