
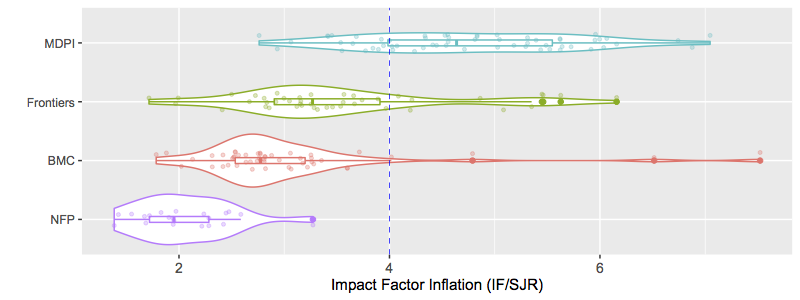

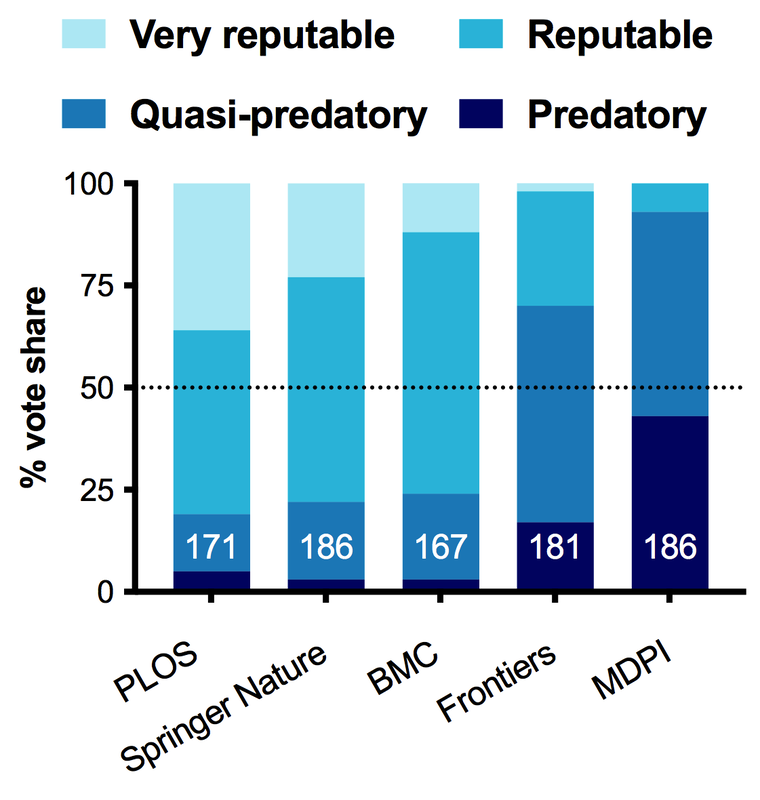

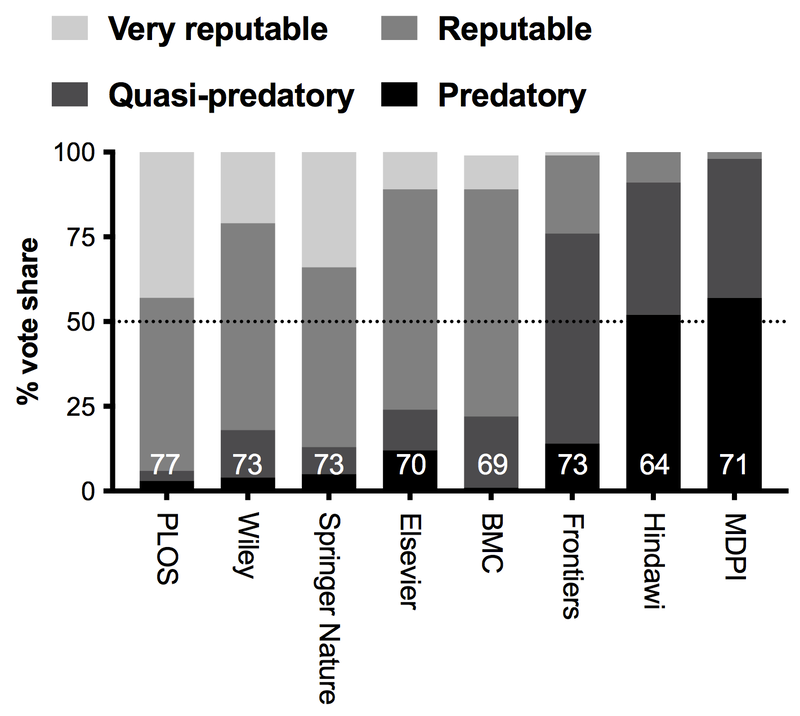

Reading time: 8-10 minutes

This blog has been critical of MDPI in the past. That came about honestly: in 2021 I looked at how inflated a journal's Clarivate Journal Impact Factor was relative to a metric with built-in rank normalizers, the SCImago Journal Rank. In that analysis, MDPI was far and away the most severe case of Impact Factor Inflation: its citation behaviour differed significantly not only from all not-for-profit publishers, but also from for-profit Open Access publishers like BioMed Central (BMC) and even Frontiers Media ("Frontiers in ____").

Impact Factor Inflation is a metric of anomalous citation behaviour. It reveals publishers whose Clarivate Journal Impact Factors (IF) are much higher than expected once one normalizes for the network of journals citing that publisher (using the SCImago Journal Rank, SJR). The SJR formula does not reward self-citation, or receiving many citations from only a small pool of journals. Thus when a journal's Impact Factor is very high compared to its SJR (suggested litmus test: 4x higher), it signals that the Impact Factor has been inflated by self-citation, or by small self-citing circles of authors/journals.

I followed that analysis with a simple poll asking Twitter users what they thought of various publishers. I repeated that poll in 2023 on both Twitter and Mastodon and got basically the same result (if anything, opinions had settled more firmly into camps): nearly everyone labelled MDPI a somewhat or outright "predatory" publisher.

Opinion polls of academic publishers asking "What do you think of publisher ___?", conducted in 2021 and 2023 on Twitter and/or Mastodon.

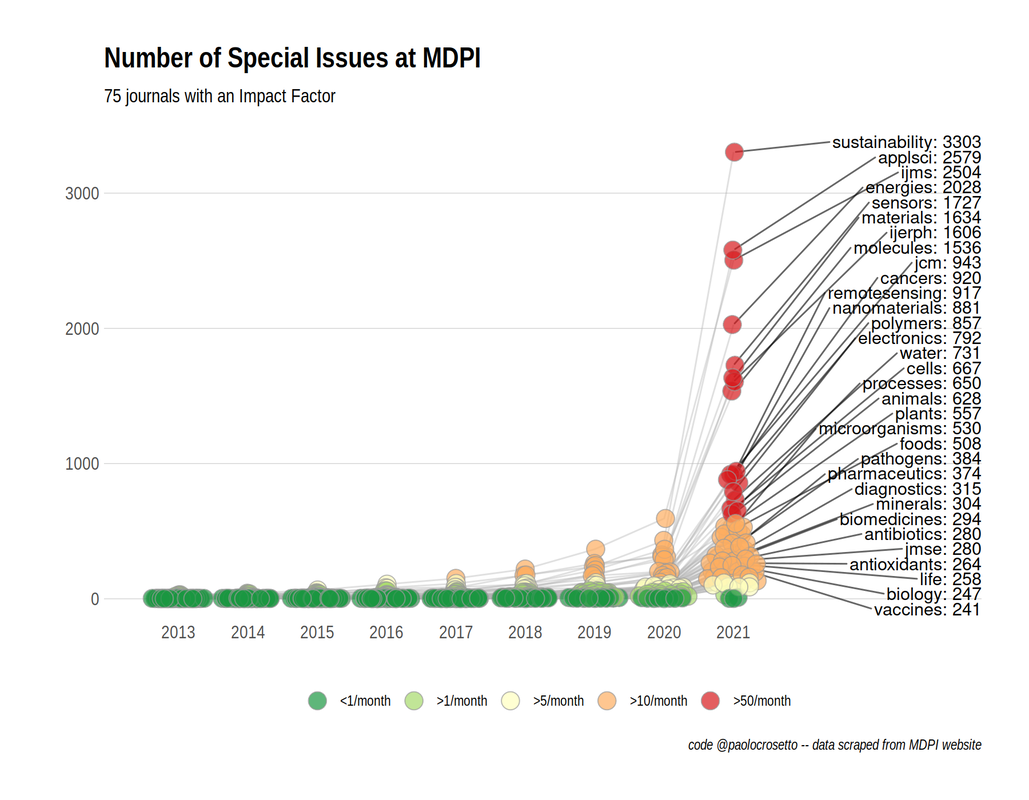
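
The Impact Factor Inflation litmus test described above boils down to a ratio. Below is a minimal Python sketch, assuming the ratio is taken directly as IF divided by SJR and that "4x higher" means flagging any journal whose ratio is at least 4. The journal names and metric values are hypothetical placeholders, not data from the 2021 analysis.

```python
# A minimal sketch of the IF/SJR "Impact Factor Inflation" litmus test.
# Assumptions (not taken from the original analysis): the ratio is IF / SJR,
# and a journal is flagged when that ratio is >= 4.
# All journal names and values used with this code are hypothetical.
from dataclasses import dataclass


@dataclass
class Journal:
    name: str
    impact_factor: float  # Clarivate Journal Impact Factor
    sjr: float            # SCImago Journal Rank


def inflation_ratio(journal: Journal) -> float:
    """Return how many times larger the Impact Factor is than the SJR."""
    if journal.sjr <= 0:
        raise ValueError(f"SJR must be positive for {journal.name}")
    return journal.impact_factor / journal.sjr


def flag_inflated(journals: list[Journal], threshold: float = 4.0) -> list[str]:
    """Names of journals whose IF/SJR ratio meets or exceeds the threshold."""
    return [j.name for j in journals if inflation_ratio(j) >= threshold]


if __name__ == "__main__":
    sample = [
        Journal("Hypothetical Journal A", impact_factor=4.6, sjr=0.8),
        Journal("Hypothetical Journal B", impact_factor=5.0, sjr=2.5),
    ]
    for j in sample:
        print(f"{j.name}: IF/SJR = {inflation_ratio(j):.2f}")
    print("Flagged:", flag_inflated(sample))
```

The threshold is a parameter rather than a constant because "4x" is offered as a suggested litmus test, not a hard cutoff.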

Around the same time, Paolo Crosetto wrote a fantastic piece on MDPI's anomalous growth in Special Issues. Year-over-year growth of Special Issues exploded between 2020 and 2021, going from 6,756 in 2020 to 39,587 in 2021. The journal Sustainability has been publishing ~10 special issues per day, and keep in mind that a special issue often comprises ~10 articles. As Crosetto put it: "If each special issue plans to host six to 10 papers, this is 60 to 100 papers per day. At some point, you are bound to touch a limit – there possibly aren’t that many papers around... That’s not to talk about quality, because even if you manage to attract 60 papers a day, how good can they be?"
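
Crosetto's figures are easy to check with back-of-envelope arithmetic. This Python sketch only multiplies and divides the numbers quoted above; the function names are illustrative, and the per-day figure assumes every special issue fills to its planned size, which is Crosetto's framing rather than observed output.

```python
# Back-of-envelope arithmetic for the special-issue figures quoted above.
# Inputs come from the text: 6,756 special issues (2020), 39,587 (2021),
# ~10 special issues per day in Sustainability, 6-10 papers per special issue.
# Assumption: each special issue fills to its planned size.


def growth_factor(before: int, after: int) -> float:
    """Fold-change between two yearly counts."""
    return after / before


def papers_per_day(issues_per_day: int, papers_per_issue: int) -> int:
    """Papers implied by a daily special-issue rate."""
    return issues_per_day * papers_per_issue


if __name__ == "__main__":
    print(f"2020 -> 2021 special issue growth: {growth_factor(6756, 39587):.1f}x")
    low = papers_per_day(10, 6)
    high = papers_per_day(10, 10)
    print(f"Implied papers per day: {low}-{high}")
```

In other words, the number of Special Issues grew almost sixfold in a single year.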

Not-so-special issues

The special issue model of publishing exists because there are always ideas floating around at conferences and in research circles about what direction the field should take. These ideas are often an individual's synthesis of the literature, and of their understanding of both unpublished data and why contradictory results might exist in the published literature. Special Issues, at their inception, gave authors a chance to put forth ideas that weren't built on as solid a data foundation, but were worthy topics to bring to the research community. They were article collections meant to get the field to pause, digest the many papers that had recently been published, and figure out which direction(s) would be most fruitful moving forward.

Publishers like MDPI (and others) abused that loophole. They created a publishing model built on appealing to the publish-or-perish mentality, and to researchers' need to buff out their CVs with journal articles. So they created a Ponzi-esque system where they aggressively recruited researchers to host special issues (i.e. act as editors for free), who then petitioned for articles from their network of colleagues.

The issue isn't the model: it's the frequency. At some point, all the burning questions have been discussed, and the field is waiting for new data, not rehashes of the same review articles that were written just a year ago. In the absence of new ideas, authors instead began using Special Issues as outlets for research that wasn't quite ready for publication. Knowing that Special Issues receive less scrutiny, authors commonly published work that could have used an extra experiment, or some more time for thought and interpretation. But hey... publish or perish.
I say this having been first author on a publication in a Frontiers in Immunology special issue, where my co-author and I both took advantage of the invitation to submit observations we'd made that didn't really fit in any other article. We got two solid peer reviewers, but a third (a standard that more rigorous journals often strive to achieve) might have caught one of the key inaccuracies in our discussion: we put forth the idea that plant-feeding ecologies were microbe-poor by virtue of the host plant's immune system. The idea was that plant-parasite species might have reduced pressure on their immune systems by outsourcing microbe suppression to host plants, which would explain why many immune genes are pseudogenized in those plant-feeding parasite species.

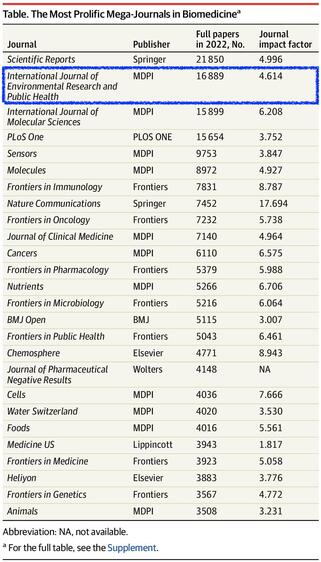

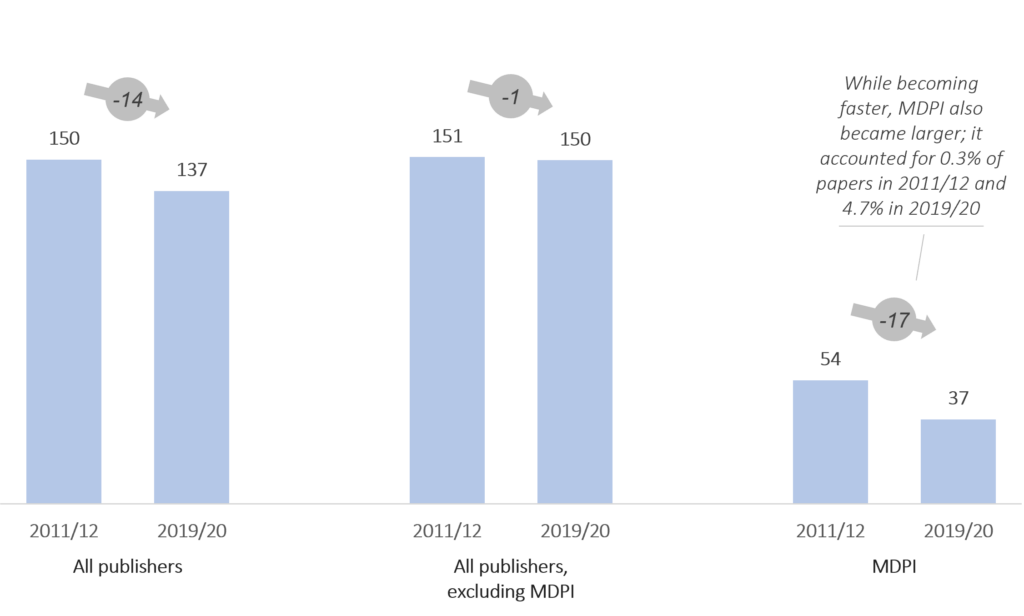

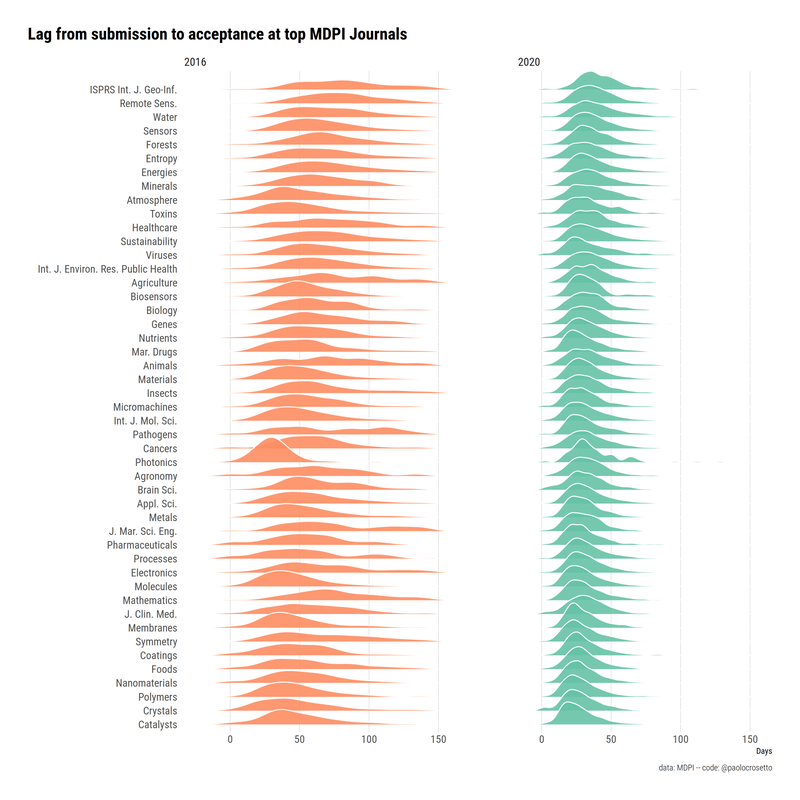

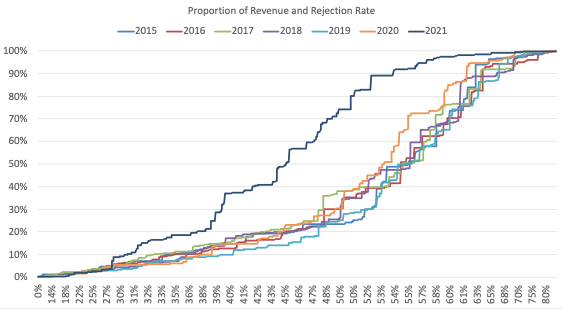

And that's the critical flaw in the way the MDPI model abuses the Special Issue loophole: ideas that aren't ready for publication get swept through peer review anyway, because everyone understands that special issues receive less scrutiny. And to be perfectly clear, MDPI very intentionally kept it that way: MDPI's article processing times are half or less those of other publishers. MDPI's turnaround times are so anomalous that Christos Petrou had to remove MDPI from his analysis of publisher turnaround times in order to paint a meaningful picture of the data. Analyses by Christos Petrou and Paolo Crosetto both found that MDPI had coordinated a massive reduction in article turnaround time in recent years, with just ~37 days from first submission to acceptance. Edit 25/03/2023: beyond processing articles quickly, MDPI's rejection rates have also decreased. Dan Brockington's analysis in Nov 2022 shows how this disproportionately increased MDPI revenues for 2021.

And so there is a lot to discuss moving forward about how to deal with MDPI. The science faculties of at least two world-ranked universities have already rescinded support for MDPI, telling their researchers that articles published in MDPI would not be counted towards their academic CV. It is high time that we sit down and consider whether such explosive publishing output is really advancing science meaningfully. Indeed, the process is not harmless: not only does it take research funds and put them towards processing fees for articles of questionable necessity... it also dilutes the literature with hundreds of articles that could have used more care and attention before final publication. Paolo Crosetto questioned whether there are even enough genuine papers in progress to meet the demands of journals like Sustainability, which may be publishing as many as 100 articles per day through special issues; and I agree.
It's very reminiscent of "salami publishing," where one publishes results piecemeal rather than waiting for a complete story that has had its loose ends tied off.

How unique is MDPI in this round of Clarivate cuts?

Table: Ioannidis et al. (2023). The Rapid Growth of Mega-Journals: Threats and Opportunities. doi:10.1001/jama.2023.3212

It is therefore not surprising, to those of us who have been following MDPI's trajectory over the last few years, that MDPI's flagship journal IJERPH finally got burned: this really could have been any MDPI journal, and for all we know more delistings are coming. Clarivate has said they have only finished analyzing ~50 of the ~500 journals of concern that came up in their 2023 round of considerations. So MDPI could have more journals on the chopping block.

It should also be said that this series of cuts isn't unique to MDPI. Another publisher seriously affected by this round of delistings is Hindawi, which had ~20 journals delisted from Clarivate in early 2023. That may make MDPI's two delisted journals seem small by comparison, but to emphasize how many articles delisting IJERPH affects: IJERPH publishes more articles per year than PLOS One, and is second only to Springer Nature's mega-journal Scientific Reports in total article output among biomedicine-related journals.

What's next then?

This is really a key turning point for MDPI: for better or worse, the current publishing system places an immense amount of value in Clarivate and Web of Science as an authority on publisher integrity. In some ways, the damage is already done: even if MDPI addressed Clarivate's concerns and got IJERPH reinstated to Web of Science, who would really look at IJERPH the same way now? Especially when so many other comparable publishers are available (such as Frontiers Media, as is apparent from the Table above).
Per my IF/SJR analysis, per Christos Petrou's article turnaround time analysis, and per polls of public opinion, Frontiers has somehow maintained citation behaviour and a public reputation noticeably different from MDPI's, while still keeping reasonable article turnaround times, and it hasn't earned the ire of the entire research community (yet?). MDPI's ability to sell itself as a highly respected publisher has taken a serious blow with this delisting, particularly as the same behaviours that got IJERPH delisted are copy/pasted across all MDPI journals. However, MDPI could still right the ship with a serious overhaul of its business approach and its editorial practice.

However... that's asking a lot of a publisher that spent the last decade coordinating a massive overhaul of its structure to reach this exact point. For now, I will continue to boycott MDPI requests to review, to submit articles, or to host special issues (I've had several in the past few weeks alone). I'd encourage all readers to join me: the power we give to publishers like MDPI, or to authorities like Clarivate, is the power of collective action. Either we follow MDPI's example, or we follow the example of world universities and Clarivate in removing MDPI from our personal lists of reputable publishers.

Cheers,
Mark Hanson

23 Comments

Daniel Stan

3/26/2023 12:28:50 pm

Science in IJERPH is top notch. This doesn't change anything, to be honest. Peer review is thorough as well. IJERPH is one of the most cited journals in the world and will continue to be so. Anyone who's a scientist understands that the vast majority of science published in IJERPH is from promising scientists across the world. Scientists publishing in "top" journals even borrow ideas from most MDPI journals. This article is informative, but it will not change publication culture, because MDPI is here to stay for all the PhD students and early career researchers who constitute the bulk of researchers doing the actual work.

Iqbal

3/26/2023 04:51:44 pm

IJERPH and many other MDPI journals that publish more than 1,000 papers a month are nothing more than trash. We all know how the citations of these journals increase. This should stop; otherwise research will be restricted to publications only.

Amir Hossein Ahmadi

3/26/2023 11:55:17 pm

I think so too.

Tesfaye Senbeta

3/26/2023 02:29:02 pm

I agree that researchers must boycott journals that treat peer reviews as a mere formality and use IF as a measure of quality by systematically falsifying them. In addition to boycotting publishers, we should also refuse to cite journals published by publishers that disregard academic integrity.

Kaal

3/26/2023 09:26:34 pm

Clarivate delisted 82 journals, only 2 of which were from MDPI. Hindawi alone had 15 journals. Other renowned publishers like Springer, Elsevier, Sage, Taylor and Francis, and Wiley were all listed in this group. Why the emphasis on MDPI? Just curious

I guess re-read "How unique is MDPI in this round of Clarivate cuts?"

Morgado Dias

4/4/2023 03:04:48 pm

I have published in many places from IEEE, Springer, Elsevier, Wiley and MDPI. While I can agree with the concerns since not everything runs smoothly in MDPI (I am member of the editorial board of a couple of journals and I suggested some improvements), I have seen similar problems in other publishing houses, sometimes more deeply.

Amir Hossein Ahmadi

3/26/2023 11:49:50 pm

I have a question.

Mark

4/4/2023 12:41:49 pm

Hey, sorry this comment took so long to approve. It got caught in the spam folder for some reason... Will check the spam more in future.

Andreas Weiermann

4/2/2023 12:51:40 pm

Please continue in writing about these matters. This is a great service for science in general.

Mark

4/4/2023 08:34:49 am

Much appreciated :)

Simona

4/4/2023 04:58:50 am

And still, I found many great articles in the journal, so I appreciate the authors, not the industry, which, to be fair, was encouraged by universities that put pressure on researchers to publish more and more. You cannot advance in your career without writing and publishing lots of articles. There is no industry without demand. MDPI is a business. If you want to publish something great with recent results, 1-2 years to publication is too long. By then, other researchers will probably have published similar results and yours will definitely be obsolete. I honestly blame universities for pressuring professors into this madness where whoever publishes the most gets a promotion. Otherwise, you are less. It does not matter that you teach students something.

Mark

4/4/2023 08:34:26 am

One must disconnect the work from the publisher. That goes both ways. The work will always stand on its own merits, whether it is a free-to-post preprint, published in MDPI, published by PeerCommunityIn (a diamond open access service available to all, with no fees to authors or readers), or published in any journal really...

MOrgado Dias

4/4/2023 06:00:15 am

I would like to know why my comment was not published. Did I hit a nerve?

Mark

4/4/2023 08:26:57 am

Nope, it's because you only waited 1h2m (assuming you're "Simona").

morgado Dias

4/4/2023 12:08:44 pm

I wrote a long answer to Kaal, about 3 days ago. It was not published.

Mark

4/4/2023 12:31:18 pm

Oh... weird. No I don't see that in the pending comments section. Which means I guess it did not successfully submit? Sorry, feel free to re-write it and I will approve it when I see it pending.

Simona

4/4/2023 10:47:11 pm

Just saying you cannot publish where you want. Universities impose the conditions: fast, indexed in WoS, in Q1 or Q2. So you choose what your employer wants you to choose. I wrote a few articles for IJERPH and others, and the reviews were very competent: 2-3 rounds of review and sometimes 3-4 reviewers. Articles without a model were rejected by the editor, so without a strong methodology my articles would not have passed initial screening. Also, as a reviewer for them myself, I found real gems, very inspiring articles. I rejected articles that did not meet the criteria. MDPI is a business, and this model is hard for some to accept. They have an entire network of reviewers and editors answering promptly. As a reviewer you have 10 days for each review, which for me is enough to read and make my comments. After that, the author has another 10 days. And this is only for the first round. I reviewed one paper three times before the final decision of rejection was made. Waiting 2 years to publish your research in journals that do not invest in developing their networks of reviewers is not acceptable. And the examples mentioned, with preprints or others, are unacceptable too, because universities do not recognize them, and you are forced to choose a business model like MDPI. I do not have a pro-MDPI opinion; I am just stating that quality, business, and research can go hand in hand. And so far, they are close to this. Personally, I did not see bad articles published by MDPI. Any analysis should take quality into account, not just how fast or numerous the articles are. They are a business not for profit (even if some journals have their own foundations supporting research, like Sustainability); they have many, many people working for them, from reviewers to editors.

Mark

4/5/2023 01:52:25 am

Annoying... I wrote a long comment and it seems to have been lost/never posted. Not sure what's going on... I've turned off comment moderation for now. With the rate of comments, probably more work to approve real ones than it is to just delete spam later.

Simona

4/5/2023 04:01:00 am

Thanks for your reply. Not a niche, but maybe not as complicated as the domains you mentioned. Economics and public policy are my areas of expertise. Honestly, I would prefer a system in which professors can choose to teach or do research. In my country you need to publish, otherwise you cannot teach :) That is my dilemma, and very far from your subject. I judge articles, not publishers, and I will continue to do that. And I found real gems in MDPI in my domain. You can read the entire paper. For example, authors can choose to publish review reports. I always said yes; I am proud of my work as both a reviewer and author for MDPI and other journals too. I am disappointed by other journals because time is sensitive in research. The others can do more. And I am a reviewer, for free, for many journals: Springer, Taylor and Francis, Elsevier. Their delays are not because reviewers get more time... they take time because they are understaffed. You, as an author, receive an email that they received your paper, and after 3-4 months they say they sent your paper to review. So there is a difference: 6-12 months to publication does not equal 6-12 months of review! Just less efficient publishing houses, fewer reviewers, less marketing. We can write back and forth, but I maintain my opinion. I did not find bad articles in my field published by IJERPH. In my field, you have to create econometric models, use surveys, apply different models and so on. Until I invested in myself to learn to do this, I never succeeded in publishing; I was rejected by the MDPI journals I sent my articles to. And I am not at the beginning of my career. I publish with Springer (books), but universities do not recognize Springer books much. So nothing is black or white. This will be my last comment. Wishing you and your readers all the best.

MA

4/5/2023 08:24:50 am

IJERPH is not a MEGA journal. It's a simple key to read a lot of analysis, posts and mix of news on MDPI like this: no references, no deeper analysis, no library and information science topic knowledge....

Morgado Dias

4/5/2023 01:31:07 pm

Wow, where did you get that from? Not what I saw as SI Editor and not the other participants view.

Mark

4/6/2023 01:55:20 am

https://www.mdpi.com/journal/ijerph
